AWS Bedrock Reusable Prompts

If you send your model a large system prompt or, even more likely, a long list of tool definitions with every request, that is probably slowing your requests down and costing you more than it needs to.

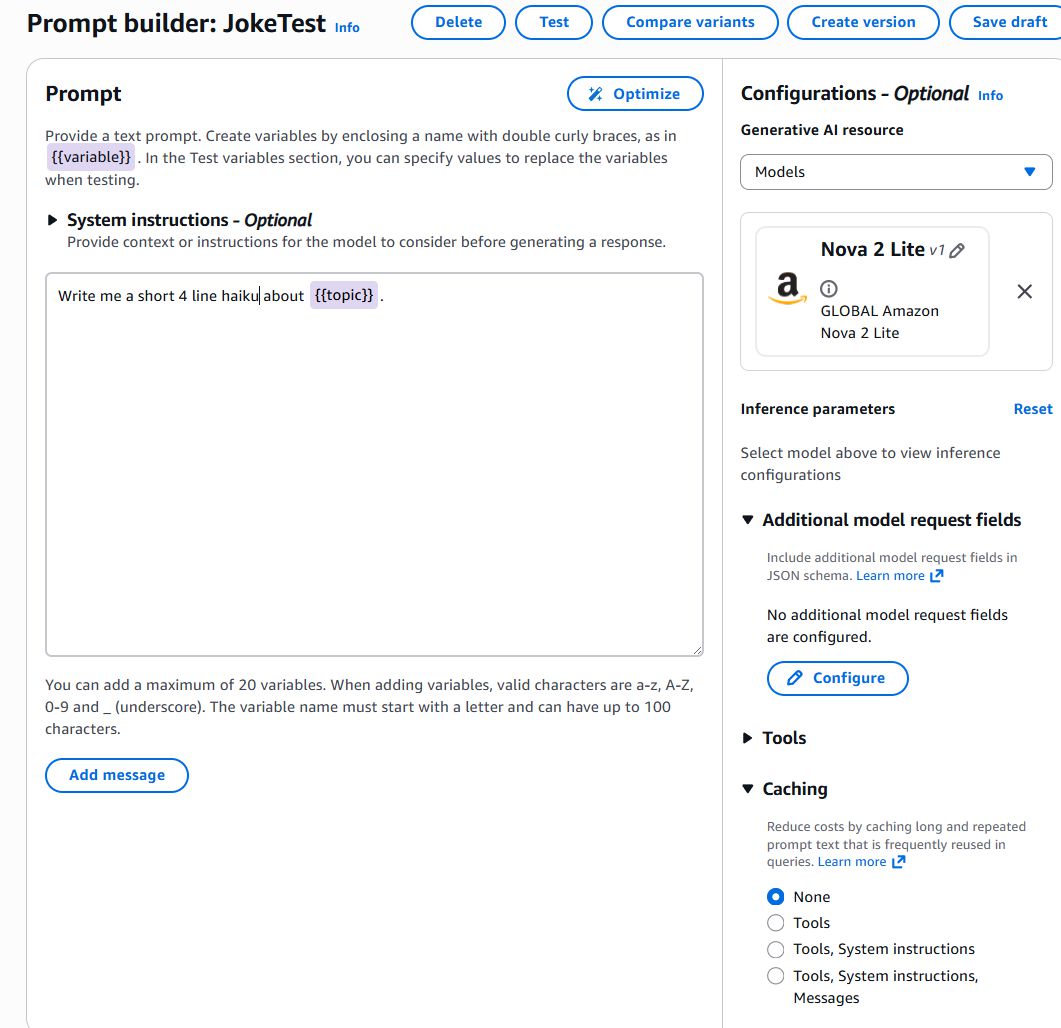

AWS Bedrock lets you create Reusable Prompts: stored prompt templates into which you pass a few key variables that change the output.

As a test, I created a Reusable Prompt: “Write me a short 4-line haiku about {{topic}}.”
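For reference, here's a rough sketch of creating that same prompt programmatically with boto3's `bedrock-agent` client: `create_prompt` stores the template, and `create_prompt_version` gives you a versioned ARN to invoke. The prompt name, model ID, and optional KMS key ARN are placeholders, the helper function names are hypothetical, and caching settings are left out. Double-check field names against the current Prompt management docs before relying on them.

```python
"""Sketch: create the haiku Reusable Prompt with boto3 (Bedrock Prompt management).

Assumption: prompt name, model ID, and KMS key ARN are hypothetical placeholders.
"""
from typing import Any, Optional

HAIKU_TEMPLATE = "Write me a short 4-line haiku about {{topic}}."


def build_create_prompt_request(
    name: str,
    model_id: str,
    kms_key_arn: Optional[str] = None,
) -> dict[str, Any]:
    """Return keyword arguments for bedrock-agent's create_prompt call."""
    variant = {
        "name": "default",
        "templateType": "TEXT",
        "modelId": model_id,  # hypothetical placeholder supplied by the caller
        "templateConfiguration": {
            "text": {
                "text": HAIKU_TEMPLATE,
                # Declare every {{variable}} the template uses.
                "inputVariables": [{"name": "topic"}],
            }
        },
    }
    request: dict[str, Any] = {
        "name": name,
        "defaultVariant": "default",
        "variants": [variant],
    }
    if kms_key_arn:
        # Optional customer managed KMS key used to encrypt the stored prompt.
        request["customerEncryptionKeyArn"] = kms_key_arn
    return request


def create_prompt_version_arn(request: dict[str, Any]) -> str:
    """Create the prompt, snapshot a version, and return that version's ARN.

    Needs AWS credentials and network access.
    """
    import boto3  # imported lazily so the request builder works without boto3

    client = boto3.client("bedrock-agent")
    prompt = client.create_prompt(**request)
    version = client.create_prompt_version(promptIdentifier=prompt["id"])
    return version["arn"]
```

Calling `build_create_prompt_request("haiku-prompt", "<your-model-id>")` and passing the result to `create_prompt_version_arn` would return the ARN you reference at invocation time. Keeping the request builder separate from the AWS call makes it easy to inspect or test the payload without credentials.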

Then I passed the model just the topic. For my Wisconsin people, I went with the word “Cheese”.

It returned the following:

Cheese Haiku

Creamy whispers soft,
Aged in caves, sharp dreams alight,
Joy on every bite.

Not exactly Shakespeare, but it did the trick. This was a tiny example, but at scale, if every request carries tens of thousands of input tokens, sending only the variables could cut the data you push over the network and speed up your requests. As an added security bonus, you can encrypt your prompts with a KMS key in case they contain anything proprietary.
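To make the payload difference concrete, here's a sketch comparing two `bedrock-runtime` Converse requests: one that inlines a large system prompt, and one that passes a prompt version ARN as `modelId` plus `promptVariables`, so only the variable travels with each call. The ARN, model ID, and filler prompt are placeholders, and the helper names are hypothetical. It only measures serialized request size; the model still sees the full rendered prompt either way.

```python
"""Sketch: inline prompt vs. Reusable Prompt request size for Converse.

Assumption: the ARN and model ID below are hypothetical placeholders.
"""
import json
from typing import Any

PROMPT_VERSION_ARN = "arn:aws:bedrock:us-east-1:111122223333:prompt/EXAMPLE:1"  # placeholder
INLINE_MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"  # placeholder


def inline_request(big_system_prompt: str, topic: str) -> dict[str, Any]:
    """Converse kwargs that ship the whole prompt on every call."""
    return {
        "modelId": INLINE_MODEL_ID,
        "system": [{"text": big_system_prompt}],
        "messages": [{"role": "user", "content": [{"text": topic}]}],
    }


def reusable_prompt_request(topic: str) -> dict[str, Any]:
    """Converse kwargs that point at a stored prompt and fill {{topic}}."""
    return {
        "modelId": PROMPT_VERSION_ARN,
        "promptVariables": {"topic": {"text": topic}},
    }


def payload_bytes(request: dict[str, Any]) -> int:
    """Rough size of the request body once serialized as JSON."""
    return len(json.dumps(request).encode("utf-8"))


def invoke(request: dict[str, Any]) -> str:
    """Send the request and return the first text block (needs AWS access)."""
    import boto3  # lazy import: the size comparison runs without boto3

    client = boto3.client("bedrock-runtime")
    response = client.converse(**request)
    return response["output"]["message"]["content"][0]["text"]


if __name__ == "__main__":
    big_prompt = "You are a careful assistant. " * 2000  # stand-in for a large prompt
    print("inline bytes:  ", payload_bytes(inline_request(big_prompt, "Cheese")))
    print("reusable bytes:", payload_bytes(reusable_prompt_request("Cheese")))
```

The inline request grows with the prompt, while the Reusable Prompt request stays roughly constant no matter how large the stored template gets.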

While I didn’t find anything indicating that Reusable Prompts save money on their own, they do support Prompt Caching, which you can enable by default when you build the Reusable Prompt. For this use case, I hope the cache TTL isn’t limited to 5 minutes, since you would want the cache (and its 75% discount on cached input tokens) to apply to every invocation of the Reusable Prompt, but I suspect the 5-minute limit still applies.
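Here's a back-of-the-envelope sketch of what caching could mean for input costs. The price per million tokens is a hypothetical placeholder, cache reads use the 75% discount mentioned above, cache-write pricing is ignored, and `cache_hit_rate` is a stand-in for how often requests land within the cache TTL.

```python
"""Sketch: estimate input-token cost with and without prompt caching.

Assumptions: hypothetical price, 75% read discount, cache-write pricing ignored.
"""


def input_cost(
    requests: int,
    cached_tokens: int,
    fresh_tokens: int,
    price_per_million: float,
    cache_hit_rate: float,
    read_discount: float = 0.75,
) -> float:
    """Total input cost in dollars for a batch of requests."""
    per_token = price_per_million / 1_000_000
    hits = requests * cache_hit_rate
    misses = requests - hits
    # Misses pay full price for the reusable part; hits pay the discounted rate.
    cached_part = cached_tokens * per_token * (misses + hits * (1 - read_discount))
    # The per-request variables (e.g. the topic) are always billed at full price.
    fresh_part = requests * fresh_tokens * per_token
    return cached_part + fresh_part


if __name__ == "__main__":
    for rate in (0.0, 0.5, 1.0):
        cost = input_cost(10_000, 20_000, 10, 1.00, cache_hit_rate=rate)
        print(f"hit rate {rate:.0%}: ${cost:,.2f}")
```

With 10,000 requests that each reuse 20,000 cached tokens plus 10 fresh tokens at a hypothetical $1.00 per million, the estimate drops from about $200.10 with no cache hits to about $50.10 when every request hits the cache. A short TTL pushes `cache_hit_rate` toward zero for prompts invoked infrequently, which is why the TTL matters here.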

So let me ask you: Do you have any other cost-saving tips for using AI/ML on AWS?